CoSeRNN

Created: 2022-06-24 09:30

#paper

Main idea

In music recommendation there are several challenges that differ from the ones encountered in other fields -> tracks are short, listened to multiple times, typically consumed in sessions together with other tracks, and their relevance is highly context-dependent.

This paper presents a way to deal with these aspects -> modeling users' preferences at the beginning of a session, taking into account past consumption and session-level context (such as the time of day or the device used to access the service).

The starting point is a vector-space embedding of tracks, where two tracks are close in space if they are likely to be listened to successively. A user is modeled as a sequence of context-dependent embeddings, one for each session. A variant of an RNN is trained to maximize the cosine similarity between its output and the tracks played during the session, given the current session context and the representation of the user's past consumption.

So, this model learns user and item embeddings separately rather than jointly, unlike most other models -> this can improve modularity, since components can be changed or added independently.

Recap: this model does not predict the individual tracks inside a session; instead, it generates a session-level user embedding and relies on the fact that the session's tracks will lie inside a small region of the space. Furthermore, representing a session as a single embedding sidesteps the problem that predicting every individual item in a session quickly becomes intractable.
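
Because the model outputs one vector per session instead of scoring tracks one by one, recommending tracks amounts to a nearest-neighbour lookup in the track space. A minimal NumPy sketch of that lookup, assuming cosine similarity as the ranking score (it is what the training objective maximizes) and a brute-force scan over a tiny made-up catalogue; `rank_tracks` is a hypothetical helper, and a large catalogue would likely call for an approximate nearest-neighbour index instead.

```python
import numpy as np


def rank_tracks(user_emb: np.ndarray, track_embs: np.ndarray, k: int = 10) -> np.ndarray:
    """Indices of the k tracks most cosine-similar to a session-level user embedding."""
    u = user_emb / np.linalg.norm(user_emb)
    t = track_embs / np.linalg.norm(track_embs, axis=1, keepdims=True)
    scores = t @ u                   # one cosine similarity per track
    return np.argsort(-scores)[:k]   # most similar first


# Toy 2-D catalogue; the note's embeddings are 40-dimensional.
tracks = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]])
user = np.array([1.0, 0.2])
print(rank_tracks(user, tracks, k=2))  # [0 2]
```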

The model takes inspiration from the approach of JODIE, described in JODIE-Predicting Dynamic Embedding Trajectory in Temporal Interaction Networks.

In depth

Session-level information

For each session, the following information is collected:

- the set of tracks played during the session;

- which tracks were skipped;

- the stream source (user playlist, top charts, etc.);

- a timestamp representing the start of the session;

- the device used to access the service

Notation:

- $d_t$ -> Day of the week;

- $h_t$ -> Time of day;

- $a_t$ -> device;

- $n_t$ -> number of tracks in session;

- $\Delta_t$ -> time since last session;

- $r_t$ -> stream source;

- $\mathbf{s}_t$ -> session embedding, all tracks;

- $\mathbf{s}^{+}_t$ -> session embedding, played only;

- $\mathbf{s}^{-}_t$ -> session embedding, skipped only

The session embeddings are 40-dimensional.
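
To make the per-session fields concrete, here is one hypothetical way to hold them in Python. The class and field names, the integer encoding of day and hour, and the reading of "time since last session" as the gap between consecutive session start timestamps (the only timestamp listed is the session start) are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Session:
    """Hypothetical record of the session-level information listed above."""
    day_of_week: int     # d_t, assumed 0 = Monday ... 6 = Sunday
    hour_of_day: int     # h_t, assumed hourly buckets 0..23
    device: str          # a_t, e.g. "mobile" (illustrative value)
    stream_source: str   # r_t, e.g. "user_playlist" (illustrative value)
    start_ts: float      # session start, seconds since the Unix epoch
    track_ids: List[str] = field(default_factory=list)
    skipped: List[bool] = field(default_factory=list)  # one flag per track

    @property
    def n_tracks(self) -> int:
        """n_t: number of tracks in the session."""
        return len(self.track_ids)


def time_since_last(prev: Session, cur: Session) -> float:
    """Delta_t, assumed here to be the gap between consecutive session starts (s)."""
    return cur.start_ts - prev.start_ts


s1 = Session(0, 8, "mobile", "user_playlist", 1_000.0, ["a", "b", "c"], [False, True, False])
s2 = Session(0, 9, "desktop", "top_charts", 4_600.0, ["d"], [False])
print(s1.n_tracks, time_since_last(s1, s2))  # 3 3600.0
```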

Embeddings

Tracks are embedded using word2vec trained on a set of user-generated playlists.
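
word2vec learns from which items co-occur within a short window, treating each playlist like a sentence and each track like a word. The sketch below only generates the (center, context) training pairs of the skip-gram variant; whether the authors used skip-gram or CBOW, and which window size, is not stated in this note, so both are assumptions here. The embedding training itself (negative sampling, subsampling of frequent items, etc.) would be left to a word2vec implementation.

```python
from typing import List, Tuple


def skipgram_pairs(playlist: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """(center, context) pairs for every track within `window` positions of another."""
    pairs = []
    for i, center in enumerate(playlist):
        lo, hi = max(0, i - window), min(len(playlist), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, playlist[j]))
    return pairs


print(skipgram_pairs(["a", "b", "c"], window=1))
# [('a', 'b'), ('b', 'a'), ('b', 'c'), ('c', 'b')]
```

Tracks that often appear next to each other in playlists produce many shared pairs, which is what pulls their embeddings close together.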

A session is represented as the average of the embeddings of the tracks it contains -> it is a summary of the session's content.

Note that for long sessions it is not clear whether the context stays constant throughout -> the authors keep only the first 10 tracks, i.e. they predict just the beginning of the session.
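
A NumPy sketch of how the three session embeddings (all tracks, played only, skipped only) can be computed as plain averages over the first 10 tracks. Mapping an empty subset (e.g. a session with no skips) to the zero vector is an assumption of this sketch; `session_embeddings` is a hypothetical helper.

```python
import numpy as np

MAX_TRACKS = 10  # only the first 10 tracks of a session are kept


def session_embeddings(track_embs, skipped):
    """Return (s_t, s_t^+, s_t^-): mean embedding of all, played and skipped tracks.

    track_embs: (n, dim) track embeddings in play order.
    skipped:    (n,) skip flags. An empty subset maps to the zero vector (assumption).
    """
    track_embs = np.asarray(track_embs, dtype=float)[:MAX_TRACKS]
    skipped = np.asarray(skipped, dtype=bool)[:MAX_TRACKS]
    dim = track_embs.shape[1]

    def mean_or_zero(x):
        return x.mean(axis=0) if len(x) else np.zeros(dim)

    return (mean_or_zero(track_embs),
            mean_or_zero(track_embs[~skipped]),
            mean_or_zero(track_embs[skipped]))


embs = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
s_all, s_played, s_skipped = session_embeddings(embs, [False, True, False])
print(s_all, s_played, s_skipped)
```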

Architecture

For the session with index $t$, the predicted session-level user embedding is denoted $\mathbf{u}_t$ and the observed session embedding $\mathbf{s}_t$. The model has to maximize the (cosine) similarity between $\mathbf{u}_t$ and $\mathbf{s}_t$.

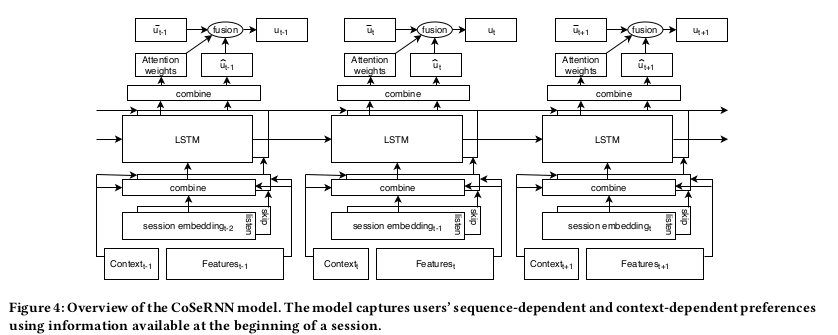

CoSeRNN uses features from the current context and features about the last session as input to two RNNs, representing play and skip behavior. The RNNs combine the input with a latent state to capture the user's consumption habits. The outputs of the two RNNs are then combined and fused with a long-term user embedding.

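Input layers: the two feature vectors are defined as $\mathbf{x}^{+}_t = \mathbf{c}_t \oplus \mathbf{s}^{+}_{t-1}$ and $\mathbf{x}^{-}_t = \mathbf{c}_t \oplus \mathbf{s}^{-}_{t-1}$, where $\mathbf{c}_t$ is a concatenation of one-hot encodings of the contextual variables $d_t$ (day), $h_t$ (time of day) and $a_t$ (device), $\mathbf{s}^{+}_{t-1}$ and $\mathbf{s}^{-}_{t-1}$ are the played-only and skipped-only embeddings of the previous session, and $\oplus$ stands for concatenation. Before passing the features to the RNN, a learned nonlinear transformation is applied to help the RNN focus on modeling latent sequential dynamics. The transformation is $\tilde{\mathbf{x}}^{+}_t = \phi(\mathbf{W}^{+}\mathbf{x}^{+}_t + \mathbf{b}^{+})$, with $\phi$ a nonlinearity (same for $\mathbf{x}^{-}_t$).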
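
A NumPy sketch of the input layer for the play branch, assuming the play features are the one-hot current context concatenated with the previous session's played-only embedding. The category counts (7 days, 24 hourly buckets, 4 device types), the width of 64, and tanh as the nonlinearity are assumptions; the weights are random stand-ins for learned parameters. The skip branch would be identical with $\mathbf{s}^{-}_{t-1}$ and its own weights.

```python
import numpy as np


def one_hot(index: int, size: int) -> np.ndarray:
    v = np.zeros(size)
    v[index] = 1.0
    return v


def context_vector(day: int, hour: int, device: int, n_devices: int = 4) -> np.ndarray:
    """c_t = onehot(d_t) ⊕ onehot(h_t) ⊕ onehot(a_t).
    7 days, 24 hourly buckets and 4 device types are assumed cardinalities."""
    return np.concatenate([one_hot(day, 7), one_hot(hour, 24), one_hot(device, n_devices)])


def input_transform(x: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Learned nonlinear layer applied before the LSTM; tanh is an assumed choice."""
    return np.tanh(W @ x + b)


rng = np.random.default_rng(0)
c_t = context_vector(day=2, hour=18, device=1)       # 35 dims
s_prev_played = rng.normal(size=40)                   # stand-in for s^+_{t-1}
x_plus = np.concatenate([c_t, s_prev_played])         # x^+_t = c_t ⊕ s^+_{t-1}
W_plus = rng.normal(scale=0.1, size=(64, x_plus.size))
b_plus = np.zeros(64)
x_tilde_plus = input_transform(x_plus, W_plus, b_plus)
print(x_plus.shape, x_tilde_plus.shape)  # (75,) (64,)
```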

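The RNNs are LSTM models, defined as $(\mathbf{o}^{+}_t, \mathbf{h}^{+}_t) = \operatorname{LSTM}^{+}(\tilde{\mathbf{x}}^{+}_t, \mathbf{h}^{+}_{t-1})$ and $(\mathbf{o}^{-}_t, \mathbf{h}^{-}_t) = \operatorname{LSTM}^{-}(\tilde{\mathbf{x}}^{-}_t, \mathbf{h}^{-}_{t-1})$, where $\tilde{\mathbf{x}}^{\pm}_t$ is the transformed input, $\mathbf{o}$ is the output and $\mathbf{h}$ is the hidden state.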
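
A NumPy sketch of the two recurrent branches, using a textbook LSTM cell run over a user's sequence of sessions. In this sketch the per-session output is the LSTM's hidden vector, and the state carried to the next session is the pair (hidden vector, cell state). The widths, the number of sessions and the random inputs are assumptions; play and skip branches get separate weights.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, cell_prev, W, U, b):
    """One standard LSTM step. W: (4H, D), U: (4H, H), b: (4H,).
    The gate order (input, forget, candidate, output) is only a layout convention."""
    H = h_prev.size
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[:H])              # input gate
    f = sigmoid(z[H:2 * H])         # forget gate
    g = np.tanh(z[2 * H:3 * H])     # candidate cell update
    o = sigmoid(z[3 * H:])          # output gate
    cell = f * cell_prev + i * g
    h = o * np.tanh(cell)
    return h, cell


def run_branch(xs, params, hidden):
    """Run one branch (play or skip) over a user's sessions; returns o_1..o_T."""
    h, cell = np.zeros(hidden), np.zeros(hidden)
    outputs = []
    for x in xs:
        h, cell = lstm_step(x, h, cell, *params)
        outputs.append(h)
    return np.stack(outputs)


rng = np.random.default_rng(0)
D, H, T = 64, 32, 3  # input width, hidden width, number of sessions (all assumed)


def init_params():
    return (rng.normal(scale=0.1, size=(4 * H, D)),
            rng.normal(scale=0.1, size=(4 * H, H)),
            np.zeros(4 * H))


play_params, skip_params = init_params(), init_params()  # separate weights per branch
o_plus = run_branch(rng.normal(size=(T, D)), play_params, H)
o_minus = run_branch(rng.normal(size=(T, D)), skip_params, H)
print(o_plus.shape, o_minus.shape)  # (3, 32) (3, 32)
```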

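To combine the outputs $\mathbf{o}^{+}_t$ and $\mathbf{o}^{-}_t$ of the two RNNs: $\mathbf{o}_t = \phi(\mathbf{W}_o[\mathbf{o}^{+}_t \oplus \mathbf{o}^{-}_t] + \mathbf{b}_o)$ for a nonlinearity $\phi$, and then the (short-term) user embedding is obtained as $\mathbf{u}^{\text{short}}_t = \mathbf{W}_u\,\mathbf{o}_t + \mathbf{b}_u$.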
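
A NumPy sketch of the combination step: concatenate the two branch outputs, pass them through a dense nonlinear layer, then project into the 40-dimensional track space. The tanh nonlinearity, the fused width of 64 and the hypothetical parameter names `W_o`, `b_o`, `W_u`, `b_u` are assumptions.

```python
import numpy as np


def combine_branches(o_plus, o_minus, W_o, b_o, W_u, b_u):
    """o_t = tanh(W_o [o_t^+ ⊕ o_t^-] + b_o), then u_t^short = W_u o_t + b_u.
    tanh and the affine projection into the 40-dim track space are assumptions."""
    o_t = np.tanh(W_o @ np.concatenate([o_plus, o_minus]) + b_o)
    u_short = W_u @ o_t + b_u
    return o_t, u_short


rng = np.random.default_rng(0)
H, K, DIM = 32, 64, 40  # branch width, fused width, embedding size
o_t, u_short = combine_branches(
    rng.normal(size=H), rng.normal(size=H),
    rng.normal(scale=0.1, size=(K, 2 * H)), np.zeros(K),
    rng.normal(scale=0.1, size=(DIM, K)), np.zeros(DIM),
)
print(o_t.shape, u_short.shape)  # (64,) (40,)
```

The final projection keeps the user embedding in the same space as the track embeddings, which is what makes a cosine comparison with the observed session embedding meaningful.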

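The long-term user embedding is defined as a weighted average of all the previous session embeddings, $\mathbf{u}^{\text{long}}_t = \sum_{t' < t} w_{t'}\,\mathbf{s}_{t'}$, normalized such that $\sum_{t' < t} w_{t'} = 1$. To fuse the short-term embedding $\mathbf{u}^{\text{short}}_t$ (the output of the RNN part) and $\mathbf{u}^{\text{long}}_t$, the authors use attention weights based on the combined RNN output $\mathbf{o}_t$. The final user embedding is produced as $\mathbf{u}_t = \beta^{\text{short}}_t\,\mathbf{u}^{\text{short}}_t + \beta^{\text{long}}_t\,\mathbf{u}^{\text{long}}_t$, where $(\beta^{\text{short}}_t, \beta^{\text{long}}_t) = \operatorname{softmax}(\mathbf{W}_\beta\,\mathbf{o}_t + \mathbf{b}_\beta)$.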
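
A NumPy sketch of the long-term embedding and the attention-based fusion. How the weights $w_{t'}$ are chosen is not recorded in this note, so they are passed in (the values below are arbitrary). The softmax over the two candidates, with hypothetical learned parameters `W_beta` and `b_beta`, is an assumed reading of "attention weights based on the RNN output".

```python
import numpy as np


def long_term_embedding(past_sessions, weights):
    """u_t^long = sum_{t'<t} w_{t'} s_{t'}, with the weights rescaled to sum to 1."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return w @ np.asarray(past_sessions, dtype=float)


def softmax(z):
    e = np.exp(z - z.max())   # shift for numerical stability
    return e / e.sum()


def fuse(u_short, u_long, o_t, W_beta, b_beta):
    """Attention over {u_short, u_long}; weights computed from the RNN output o_t."""
    beta = softmax(W_beta @ o_t + b_beta)   # two weights that sum to 1
    return beta[0] * u_short + beta[1] * u_long, beta


past = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])        # toy s_{t'}, t' < t
u_long = long_term_embedding(past, weights=[1.0, 1.0, 2.0])   # [0.75, 0.75]
u_t, beta = fuse(np.array([1.0, 0.0]), u_long, o_t=np.ones(3),
                 W_beta=np.zeros((2, 3)), b_beta=np.zeros(2))  # beta = [0.5, 0.5]
print(u_long, beta, u_t)
```

With all-zero attention parameters both candidates get weight 0.5, so $\mathbf{u}_t$ is simply their midpoint; learned parameters let the model lean on long-term taste or on recent behavior depending on the session.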

Loss function

The model is trained to maximize the similarity between the predicted embedding $\mathbf{u}_t$ and the observed one $\mathbf{s}_t$.

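Given the training set $D$ composed of pairs $(i,t)$, where $i$ is the user and $t$ the session, the loss function is defined as $\mathcal{L} = -\sum_{(i,t) \in D} \cos(\mathbf{u}_{it}, \mathbf{s}_{it})$, with $\cos(\mathbf{u}, \mathbf{s}) = \frac{\mathbf{u}^\top \mathbf{s}}{\lVert \mathbf{u} \rVert\,\lVert \mathbf{s} \rVert}$, where $\mathbf{u}_{it}$ and $\mathbf{s}_{it}$ refer to the $t$-th session of user $i$. The loss is minimized with mini-batch stochastic gradient descent using the Adam optimizer.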
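
A NumPy sketch of the objective on one mini-batch. It averages instead of summing over the batch, which only rescales the gradient; the small `eps` guarding against zero vectors is an addition of this sketch. Computing gradients and the Adam update would be left to an autodiff framework and are not shown.

```python
import numpy as np


def cosine_loss(U, S, eps=1e-8):
    """Mean negative cosine similarity over a mini-batch.

    U: (B, dim) predicted user embeddings u_it; S: (B, dim) observed session embeddings s_it.
    eps guards against division by zero for all-zero vectors.
    """
    dots = np.sum(U * S, axis=1)
    norms = np.linalg.norm(U, axis=1) * np.linalg.norm(S, axis=1)
    return -np.mean(dots / (norms + eps))


U = np.array([[1.0, 0.0], [0.0, 2.0]])
S = np.array([[3.0, 0.0], [1.0, 0.0]])
print(cosine_loss(U, S))  # about -0.5: one aligned pair (cos 1), one orthogonal pair (cos 0)
```

Because only directions matter, the scale of $\mathbf{u}_{it}$ is irrelevant to the loss, which matches how the embedding is used at serving time (ranking tracks by cosine similarity).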